Clean Data, Smarter AI: Why Generative AI Fails Without a Data Cleaning Revolution

By GAPx | July 2025

Everyone wants to talk about the models. GPT-5. Claude 3. Gemini. The excitement is justified: Generative AI is redefining how we work, build, and make decisions. But if your data is dirty, no amount of AI horsepower will save you. Poor data quality quietly sabotages GenAI from the inside out, eroding trust, distorting insights, and rendering innovation pipelines ineffective.

This isn’t just a data science problem. It’s a boardroom problem, a product problem, and a private equity problem. The ROI of GenAI doesn’t come from shiny dashboards; it comes from how well your foundation is built. That foundation is enterprise data. And unless it’s clean, structured, and reliable, your AI investment is just automation theatre.

The Illusion of Progress: When GenAI Fails Quietly

Most businesses deploying GenAI tools are already generating content, analysing customer behaviour, or accelerating workflows. But many are learning the hard way that garbage in still means garbage out, except now it’s garbage dressed up in perfect prose or predictive confidence.

The issue? Generative models are hyper-sensitive to the private, internal data they’re trained on or prompted with. Small inconsistencies in formatting, missing values, duplicated records, or unclear naming conventions can cascade into:

Hallucinated outputs

Broken downstream workflows

Misleading personalisation

Biased decisions

Compliance and privacy risks

Worse still, many organisations don’t realise they have a data quality problem until it’s surfaced through a GenAI error that reaches a customer, investor, or regulator.

According to a 2025 McKinsey report, “Only 15% of companies deploying GenAI at scale have a dedicated data quality programme in place”, yet these are often the firms seeing the greatest ROI. (source)

Why Data Cleaning Is Different In The GenAI Era

Traditional data cleaning (removing duplicates, correcting typos, reconciling formats) was once viewed as tedious but manageable. GenAI changes the stakes:

Scale: LLMs consume vast and varied enterprise datasets. A single anomaly can influence thousands of outputs.

Context: Generative models don’t just “compute”; they interpret. Bad metadata or inconsistent labelling changes the meaning of your inputs.

Trust: When AI writes emails, makes predictions, or suggests diagnoses, users expect it to be right. That’s a much higher bar than typical BI dashboards.

In short: GenAI doesn’t just automate your outputs. It amplifies your inputs. That makes rigorous, structured, and continuous data cleaning not just helpful, but mission-critical.

A 2025 Data Cleaning Playbook For GenAI

Cleaning company-owned data for GenAI is not a one-time fix. It’s an ongoing strategy with a mix of automation, process, and human judgement. Here’s how leading firms approach it:

1. Data Profiling: Know Thy Data

Before you clean, you need to understand what you’re dealing with. Leading companies use data observability and profiling tools to:

Detect null values and schema drift

Spot duplicates and outdated records

Surface broken joins and orphan fields
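To make these profiling checks concrete, here is a minimal sketch in Python using only the standard library. It assumes records arrive as a list of dicts, and the customer schema (`EXPECTED_FIELDS`) and parent account keys are hypothetical. Dedicated observability tools go much further, but the core checks look roughly like this.

```python
from collections import Counter

# Hypothetical expected schema for a customer table (an assumption for illustration).
EXPECTED_FIELDS = {"customer_id", "email", "account_id", "updated_at"}


def is_blank(value):
    """Treat None and whitespace-only strings as missing."""
    return value is None or (isinstance(value, str) and not value.strip())


def profile(records, key="customer_id", fk="account_id", parent_keys=frozenset()):
    """Return a simple data-quality profile for a list of dict records."""
    nulls, missing, unexpected = Counter(), Counter(), Counter()
    for row in records:
        fields = set(row)
        missing.update(EXPECTED_FIELDS - fields)     # schema drift: expected field absent
        unexpected.update(fields - EXPECTED_FIELDS)  # schema drift: new, unplanned field
        nulls.update(f for f, v in row.items() if is_blank(v))
    key_counts = Counter(row.get(key) for row in records)
    duplicates = {k: n for k, n in key_counts.items() if k is not None and n > 1}
    # Orphans: foreign keys that point at no known parent record (a broken join).
    orphans = [row.get(key) for row in records
               if not is_blank(row.get(fk)) and row.get(fk) not in parent_keys]
    return {"nulls": dict(nulls), "duplicates": duplicates,
            "missing_fields": dict(missing), "unexpected_fields": dict(unexpected),
            "orphans": orphans}


rows = [
    {"customer_id": "C1", "email": "a@x.com", "account_id": "A1", "updated_at": "2025-07-01"},
    {"customer_id": "C1", "email": "", "account_id": "A9", "updated_at": "2025-07-02"},
    {"customer_id": "C2", "email": "b@x.com", "account_id": "A1", "phone": "555"},
]
report = profile(rows, parent_keys={"A1", "A2"})
print(report)
```

On this toy data the report flags one blank email, customer C1 appearing twice, a row missing `updated_at`, an unplanned `phone` field, and C1 pointing at an account (A9) that doesn’t exist.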

Tools like Monte Carlo and Bigeye help teams monitor real-time data quality issues, while Alation supports metadata discovery and lineage mapping (Alation, 2025).

Engage with stakeholders to discover problem areas and evaluate how substandard data quality affects their productivity. Data profiling is no longer a back-office function. It’s the frontline of GenAI risk mitigation.

2. Standardisation and Structure

Generative AI thrives on predictable structure. But that doesn’t mean every field must be rigidly formatted.

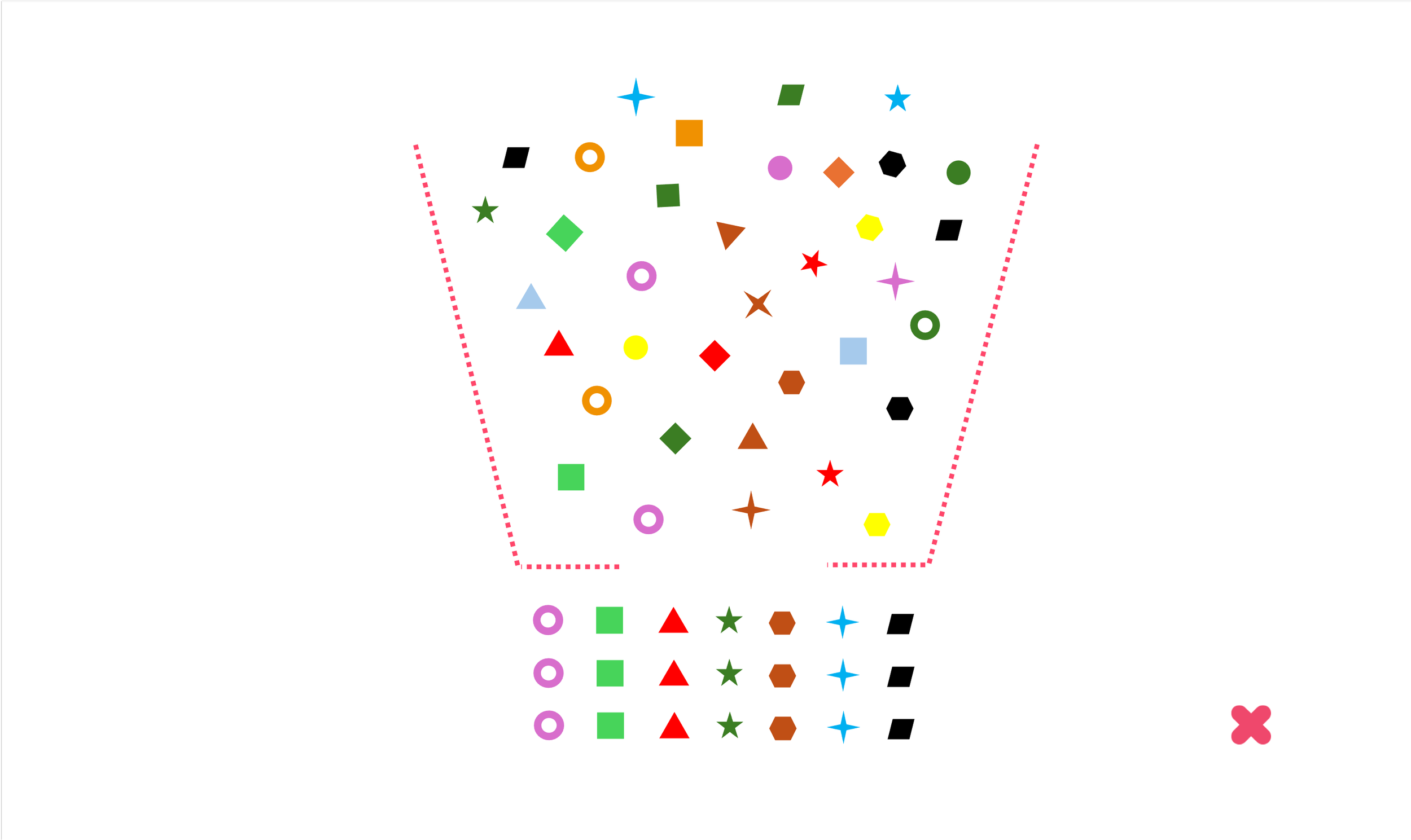

GAPx recommends using a traffic light model (Red/Amber/Green) to triage which fields require strict standardisation:

Red: Critical fields (e.g. customer IDs, currency codes) where inconsistency breaks logic

Amber: Important fields (e.g. job titles, timezones) where variation is manageable but should be flagged

Green: Flexible fields (e.g. notes, open text, loosely structured dates) where GenAI can still reason effectively

This approach avoids premature over-engineering while keeping key pipelines intact. For example, GenAI can usually handle varied date formats, but an ambiguous value such as 03/04/2025 (3 April or 4 March?) can still be misinterpreted and reduce downstream reliability.
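One way to encode the traffic light model is a field-to-tier map: Red failures block a record, Amber failures are logged as warnings, and Green fields pass straight through for the model to reason over. The sketch below is illustrative only; the tier assignments, the `C` plus six-digit ID format, and the allowed currency and timezone lists are all hypothetical.

```python
import re

# Hypothetical tier assignments; in practice these come from your own field triage.
TIERS = {"customer_id": "red", "currency": "red",
         "timezone": "amber", "job_title": "amber",
         "notes": "green"}

# Example validators (assumptions): what counts as "valid" is a business decision.
VALIDATORS = {
    "customer_id": lambda v: bool(re.fullmatch(r"C\d{6}", v or "")),
    "currency": lambda v: v in {"GBP", "EUR", "USD"},  # small subset of ISO 4217 codes
    "timezone": lambda v: v in {"Europe/London", "America/New_York", "UTC"},
    "job_title": lambda v: bool(v) and v == v.strip(),
}


def triage(record):
    """Split a record's issues into blocking Red errors and Amber warnings."""
    errors, warnings = [], []
    for field, tier in TIERS.items():
        check = VALIDATORS.get(field)
        if tier == "green" or check is None:
            continue  # flexible fields pass through untouched
        if not check(record.get(field)):
            (errors if tier == "red" else warnings).append(field)
    return errors, warnings


errors, warnings = triage({"customer_id": "C000123", "currency": "gbp",
                           "timezone": "BST", "job_title": "Head of Data",
                           "notes": "called twice, prefers email"})
print(errors, warnings)  # ['currency'] ['timezone']
```

A record with any Red error would typically be quarantined before it reaches a prompt or index, while Amber warnings are logged for review rather than blocking the pipeline.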

3. Handling Missing or Messy Data

Messy data can mislead GenAI, especially when meaning is inferred. Leading practices include:

Mean/median imputation for numerical gaps

Rule-based flags for contextually relevant blanks

AI-powered cleaning using tools like Numerous.ai or Scrub.ai, which can auto-detect anomalies and generate cleaning scripts (Analytics Vidhya, 2025)

Don’t fill for the sake of completeness. Understand what missing data says about behaviour or system design.
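A small sketch of two of these practices, assuming records are Python dicts: median imputation that keeps a `_was_missing` flag so the gap isn’t silently erased, and a rule-based check that treats some blanks as meaningful. The field names and the cancellation rule are hypothetical.

```python
from statistics import median


def impute_median(records, field):
    """Fill numeric gaps with the median and flag every filled row."""
    observed = [r[field] for r in records if r.get(field) is not None]
    fill = median(observed)  # raises StatisticsError if nothing was observed
    cleaned = []
    for r in records:
        row = dict(r)  # copy, so the raw records stay untouched for audit
        row[f"{field}_was_missing"] = row.get(field) is None
        if row[f"{field}_was_missing"]:
            row[field] = fill
        cleaned.append(row)
    return cleaned, fill


def classify_blank(record):
    """Hypothetical rule: a blank cancellation date only matters for cancelled accounts."""
    if record.get("cancellation_date") is not None:
        return "present"
    return "unexpected_blank" if record.get("status") == "cancelled" else "expected_blank"


orders = [{"id": 1, "basket_value": 40.0}, {"id": 2, "basket_value": None},
          {"id": 3, "basket_value": 60.0}, {"id": 4, "basket_value": 1000.0}]
cleaned, fill = impute_median(orders, "basket_value")
print(fill)  # 60.0
print(classify_blank({"status": "active"}))     # expected_blank
print(classify_blank({"status": "cancelled"}))  # unexpected_blank
```

The median (60.0 here) is used rather than the mean (about 366.7) because a single extreme order would otherwise distort every filled value.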

4. Validation Against Business Logic

Your data isn’t clean until it’s true to your business. This is where domain expertise becomes non-negotiable. Validation should:

Confirm data against known rules (e.g. invoices can’t have future dates)

Match categories to standard taxonomies

Identify gaps in CRM tiering, campaign tracking, or support histories

Business logic often resides in people, not platforms. Make sure your AI understands both.
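As a minimal sketch, the two rules named above (no future-dated invoices, categories drawn from a standard taxonomy) can be expressed as plain checks. The invoice fields and the three-item `PRODUCT_TAXONOMY` are hypothetical; the real rules should come from the people who own the process.

```python
from datetime import date

# Hypothetical category taxonomy; in practice, load the standard your business already uses.
PRODUCT_TAXONOMY = {"software", "services", "hardware"}


def validate_invoice(invoice, today=None):
    """Return the business-rule violations found in one invoice record."""
    today = today or date.today()
    problems = []
    if invoice["invoice_date"] > today:
        problems.append("invoice dated in the future")
    if invoice["category"].strip().lower() not in PRODUCT_TAXONOMY:
        problems.append(f"unknown category: {invoice['category']!r}")
    return problems


inv = {"invoice_date": date(2025, 8, 1), "category": "Consulting"}
print(validate_invoice(inv, today=date(2025, 7, 15)))
# ['invoice dated in the future', "unknown category: 'Consulting'"]
```

Passing `today` explicitly keeps the check reproducible in tests and audits instead of depending on when the job happens to run.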

5. Augmenting and Enriching Data

Many GenAI use cases stall not because of poor data but because of thin data. Augmentation helps by:

Pulling from external datasets (e.g. firmographics, open market intelligence)

Using NLP to tag sentiment or intent in historical logs

Building out metadata layers for unstructured content (e.g. PDFs, emails)

More context = better prompts = more accurate and relevant outputs.
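Pulling in external data is often just a careful left join. The sketch below assumes CRM rows and a hypothetical firmographics feed share a company `domain` key. It normalises the key, keeps every internal row, lets internal values win on any clash, and records which rows found no match so coverage gaps stay visible.

```python
def enrich(crm_rows, external_rows, key="domain"):
    """Left-join external attributes onto CRM rows; internal values win on clashes."""
    lookup = {ext[key].strip().lower(): ext for ext in external_rows}
    enriched, unmatched = [], []
    for row in crm_rows:
        match = lookup.get(row[key].strip().lower())
        if match is None:
            unmatched.append(row[key])  # keep the row, but record the coverage gap
            enriched.append(dict(row))
            continue
        extra = {k: v for k, v in match.items() if k != key}
        enriched.append({**extra, **row})  # the row's own fields override external ones
    return enriched, unmatched


crm = [{"domain": "Acme.com", "owner": "Sam"}, {"domain": "globex.io", "owner": "Li"}]
firmographics = [{"domain": "acme.com", "industry": "Manufacturing", "employees": 1200}]
enriched, unmatched = enrich(crm, firmographics)
print(unmatched)  # ['globex.io']
```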

Data Governance: Cleaning Isn’t Just Tech, It’s Culture

You can’t outsource trust. According to a report by Precisely, data integrity and governance are key differentiators in enterprise AI maturity (Precisely, 2025).

Embed cleaning within a broader data governance model:

Assign data owners and escalation paths

Document all cleaning logic and assumptions

Establish reproducibility and audit trails

Align privacy and consent processes with GDPR or sectoral policy

Governance isn’t overhead. It’s the insurance policy that lets you scale GenAI without friction.
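Documentation, ownership, and reproducibility can be built into the cleaning code itself. This is a minimal sketch, not a governance platform: each hypothetical cleaning step carries a written assumption, every run logs an owner and row counts, and a SHA-256 fingerprint of the output lets an auditor confirm that a rerun produces identical data. The step names and the `crm-data-team` owner are illustrative.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint(rows):
    """Stable SHA-256 of the data, so a rerun can be checked for identical output."""
    payload = json.dumps(rows, sort_keys=True, default=str).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def run_pipeline(rows, steps, owner):
    """Apply cleaning steps in order, logging each one to an audit trail."""
    audit = []
    for name, assumption, fn in steps:
        before = len(rows)
        rows = fn(rows)
        audit.append({
            "step": name,
            "assumption": assumption,  # documented cleaning logic
            "owner": owner,            # accountable data owner
            "rows_in": before,
            "rows_out": len(rows),
            "output_sha256": fingerprint(rows),
            "ran_at": datetime.now(timezone.utc).isoformat(),
        })
    return rows, audit


steps = [
    ("drop_blank_emails", "Rows without an email cannot be contacted",
     lambda rs: [r for r in rs if r.get("email")]),
    ("lowercase_emails", "Email matching is case-insensitive",
     lambda rs: [{**r, "email": r["email"].lower()} for r in rs]),
]
data = [{"email": "A@X.COM"}, {"email": ""}, {"email": "b@y.com"}]
cleaned, audit = run_pipeline(data, steps, owner="crm-data-team")
```

The audit list can be written to whatever log store you already use; the key design choice is that the assumption travels with the code that acts on it.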

GenAI Is A Mirror. What Does It Reflect?

Your GenAI system is only as good as the data you give it, because when it comes to your business, that data is all it has to work with.

Clean internal data produces:

· Safer outputs

· Faster deployment

· Better user experience

· Lower regulatory risk

· More trust internally and externally

Dirty data, by contrast, gives the illusion of innovation. It generates artefacts that look impressive but don’t perform in reality. It risks bias, failure, and brand damage. And worst of all, it leads companies to blame the model, when the real issue was always upstream.

Four Practical Steps For Getting Ahead

If you're building GenAI capability (whether internally, for a portfolio company, or across a digital transformation), start here:

1. Make Data Cleaning Part of Your AI Roadmap

Data quality isn’t a precursor to GenAI. It’s part of the product. Scope it like a feature. Budget for it like a core system.

2. Invest in Observability and Governance

Use tools like Monte Carlo or Alation to surface issues, assign ownership, and enforce accountability.

3. Bridge Tech and Business Context

Put domain experts in the room during data cleaning workflows. Context is everything. Train your teams not just on tools but on the implications of poor-quality data.

4. Develop Basic Indicators to Measure and Evaluate Data Quality

This might include monitoring field completeness rates, identifying duplicate entries, or counting errors in key data fields. Such measurements give you a baseline for data condition and help focus remediation efforts.
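The three indicators named above can start as a few lines of code. The sketch below assumes dict records and uses hypothetical field names and a deliberately simple email pattern; it reports per-field completeness, the share of rows with a repeated ID, and error counts for fields that have a validator.

```python
import re


def quality_indicators(records, key_fields, id_field, validators):
    """Baseline data-quality indicators: completeness, duplicate rate, error counts."""
    if not records:
        raise ValueError("no records to measure")
    n = len(records)
    completeness = {
        f: sum(1 for r in records if r.get(f) not in (None, "")) / n
        for f in key_fields
    }
    ids = [r.get(id_field) for r in records]
    duplicate_rate = (n - len(set(ids))) / n  # share of rows that repeat an earlier ID
    errors = {
        f: sum(1 for r in records if r.get(f) not in (None, "") and not check(r[f]))
        for f, check in validators.items()
    }
    return {"completeness": completeness, "duplicate_rate": duplicate_rate,
            "errors": errors}


rows = [
    {"id": 1, "email": "a@x.com", "postcode": "SW1A 1AA"},
    {"id": 2, "email": "", "postcode": "not known"},
    {"id": 2, "email": "c@x.com", "postcode": None},
    {"id": 4, "email": "d@x", "postcode": "EC1A 1BB"},
]
# Hypothetical, intentionally loose email check for illustration only.
validators = {"email": lambda v: bool(re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", v))}
baseline = quality_indicators(rows, ["email", "postcode"], "id", validators)
print(baseline)
```

Note that "not known" counts as complete here; deciding which placeholder values to treat as blanks is exactly the kind of rule that benefits from domain input. Tracking these numbers over time matters more than any single reading.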

At GAPx, we apply a structured, enterprise-wide lens to treating internal data before it ever reaches a GenAI interface. Our framework asks:

Quality: How accurate, consistent, and complete is the data?

Relevance: Does it align with business goals, user needs, and intended outcomes?

Holistic Approach: Is the data integrated across systems to reflect the full business and customer journey?

Timeliness: Is it available and relevant at the moment of use?

Integration: Are tools, platforms, and teams working in sync?

Security & Privacy: Are we handling sensitive data ethically and lawfully?

Bias & Diversity: Have we accounted for hidden biases, and do we understand their impact?

This is how we build trusted foundations for intelligent systems.

Final Thought: The Dirty Secret Of GenAI Is Dirty Data

In 2025, the winners in GenAI won’t be those with the most GPUs or the largest prompt libraries. They will be those with the cleanest, most trusted, most contextual data pipelines, feeding systems that know not just how to generate, but how to reason, differentiate, and act.

At GAPx, we’ve seen firsthand how neglected data quality derails transformation, and how quickly performance turns around when it’s made a priority. GenAI isn’t a magic wand. But clean data? That might be the closest thing.